Real user monitoring (RUM) has many benefits, providing vital information about the user experience directly from the source. However, it is a reactive tactic: it only yields benefits once code has been deployed to production.

Performance testing should be used to complement RUM. Here are three reasons why:

- Performance testing is proactive

- Performance testing is controlled

- Fixing defects is easier and quicker in test

1. Performance testing is proactive

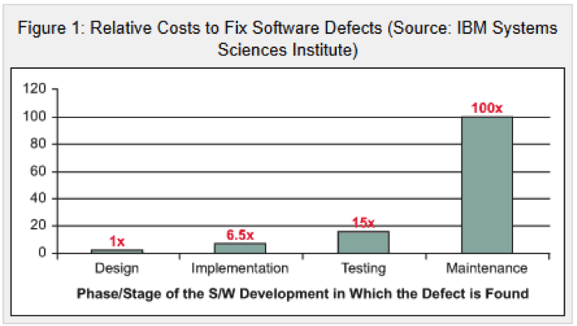

Even if the rollout plan for a change is gradual, the cost of identifying and fixing software defects in production far outweighs the cost of proactive performance testing.

Some performance defects only materialise under specific conditions, such as a particular level of load or after the system has been running for some time. Measuring the performance impact of changes only in production is therefore an unacceptable risk: it can leave your most valuable or important groups of users with a poor experience.

2. Performance testing is controlled

There are many factors that can cause a poor user experience, and not all of them are within your control, e.g. the user’s bandwidth or machine specification. RUM findings are affected by all of these factors, so there is a high likelihood of “false positives” (poor user experience that you cannot fix).

By contrast, in a controlled performance test you decide which variables change, e.g. browser type, machine specification, etc. This allows you to reliably compare new code against previous baselines, which in turn leads to easier go/no-go decisions and faster defect investigations.
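To make the baseline comparison concrete, here is a minimal sketch of an automated go/no-go check. It assumes you have page-load times in milliseconds from repeated runs of the same controlled test, once against the previous build (the baseline) and once against the new build. The function name `go_no_go`, the use of the median, and the 10% tolerance are hypothetical choices for illustration, not a prescribed method, and the sample numbers are invented.

```python
from statistics import median


def go_no_go(baseline_ms, candidate_ms, tolerance=0.10):
    """Hypothetical go/no-go rule: return True ("go") if the candidate build's
    median page-load time is at most `tolerance` (a fraction) slower than the
    baseline build's median; otherwise return False ("no-go")."""
    if not baseline_ms or not candidate_ms:
        raise ValueError("both runs need at least one measurement")
    baseline_median = median(baseline_ms)
    candidate_median = median(candidate_ms)
    return candidate_median <= baseline_median * (1 + tolerance)


# Invented sample data: five runs per build of the same controlled test.
baseline = [1200, 1250, 1180, 1230, 1210]   # median 1210 ms
candidate = [1290, 1310, 1275, 1300, 1320]  # median 1300 ms, about 7% slower

print("go" if go_no_go(baseline, candidate) else "no-go")  # prints "go"
```

Using the median rather than the mean reduces the influence of a single outlier run. Because the test environment is held constant, a difference between the two medians is more likely to reflect the code change than the environmental noise that affects RUM data.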

3. Fixing defects is easier and quicker in test

RUM collects data and presents it to the analyst after the page load has occurred.

It is easier to diagnose a client-side performance defect by inspecting a page load in real time: observing the timing of each page component and measuring user experience KPIs as the page loads. We use our UX testing software for this purpose.