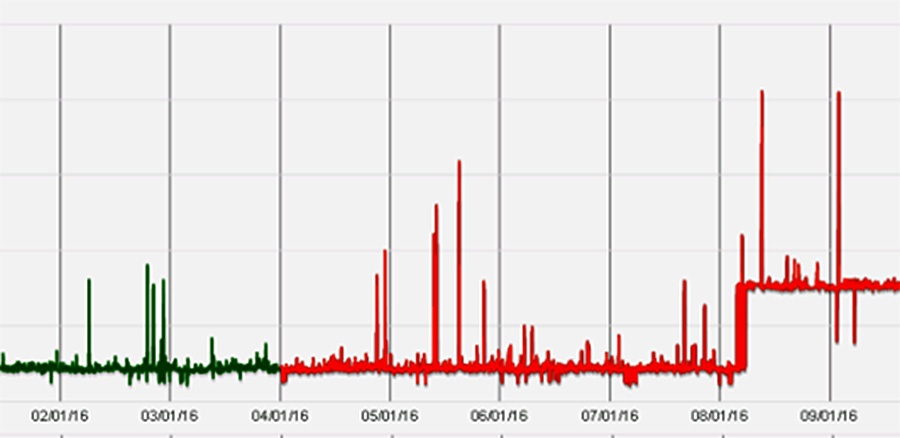

If you have some form of user response time monitoring in place for your website, you may often see step changes in response times, perhaps of a few seconds, sometimes more. Here’s a real-world example:

In our experience at Capacitas, these step changes are very often down to simple errors, such as poorly compressed product images. In retail, a business unit usually has responsibility for product information, including the product images. This is right and proper, but it does bring complications. So why does it keep happening?

- The IT department sees response time information, but is not responsible for the content.

- And the team responsible for the content probably doesn’t see the response time information.

To put it another way, if the IT department don’t own the problem, they won’t be able to fix it. And the people who do own the content are unaware of the problem, or perhaps unaware that it is a problem they are responsible for.

What are the business drivers that give rise to these issues? Business areas will probably start from the position that they want the best possible photos of their products. They want to show the goods looking great, but this can mean large, lightly compressed images.

Does it matter in these days of high-speed fibre-optic broadband? Most definitely yes. A large proportion of customers (over 50% for some of our clients) will be browsing on their phones, perhaps on public transport, where mobile connections are often much slower and less reliable than a home connection. And if your site is unresponsive, your customers are always just one click away from becoming someone else’s customers.

We have often seen image files that run into several megabytes, and these will completely dominate the response times.
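A rough calculation shows why. The Python sketch below assumes an idealised link where transfer time is simply file size divided by throughput; it ignores latency, TCP slow start and protocol overhead, so real pages will load more slowly than this. The link speeds and the 3 MB image are illustrative assumptions, not measurements from any client site.

```python
def transfer_seconds(size_bytes: int, bandwidth_mbps: float) -> float:
    """Idealised transfer time: payload bits divided by link throughput.

    Ignores latency, TCP slow start, TLS handshakes and protocol overhead,
    so real-world figures will be higher.
    """
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth must be positive")
    return size_bytes * 8 / (bandwidth_mbps * 1_000_000)


# Assumed link speeds in megabits per second, for illustration only.
LINKS_MBPS = {"congested mobile": 1.0, "typical 4G": 10.0, "fibre broadband": 100.0}

for label, mbps in LINKS_MBPS.items():
    big = transfer_seconds(3_000_000, mbps)  # a hypothetical 3 MB product image
    small = transfer_seconds(24_000, mbps)   # a 24 KB web-optimised JPEG
    print(f"{label:>16}: 3 MB -> {big:6.2f} s, 24 KB -> {small:6.3f} s")
```

On the assumed 1 Mbit/s mobile link, the 3 MB image alone takes around 24 seconds before any other page content is counted, while the 24 KB version takes a fraction of a second.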

How may this be addressed?

There are two clear lines of attack, and it would be wise to use both of them.

1. Check before the content goes live.

It is straightforward to list image file sizes and set suitable thresholds. By embedding this step in your release procedures, you can intercept any large files before they go live.
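As a minimal sketch of such a pre-release check, the Python below walks a content directory, lists image files above a size threshold, and returns a non-zero exit code so that a release pipeline can stop. The 200 KB threshold, the extension list and the function names (`oversized_images`, `release_gate`) are hypothetical choices for illustration, not a Capacitas tool.

```python
from __future__ import annotations

from pathlib import Path

# Assumed values for illustration; set the threshold and extensions to suit your site.
MAX_BYTES = 200 * 1024  # 200 KB
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif", ".webp", ".tif", ".tiff"}


def oversized_images(root: Path, max_bytes: int = MAX_BYTES) -> list[tuple[Path, int]]:
    """Return (path, size_in_bytes) for each image under root above max_bytes, largest first."""
    found = []
    for path in root.rglob("*"):
        # Match extensions case-insensitively so "PHOTO.JPG" is caught too.
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            size = path.stat().st_size
            if size > max_bytes:
                found.append((path, size))
    return sorted(found, key=lambda item: item[1], reverse=True)


def release_gate(root: Path, max_bytes: int = MAX_BYTES) -> int:
    """Print any offending files and return an exit code: 0 = pass, 1 = block the release."""
    offenders = oversized_images(root, max_bytes)
    for path, size in offenders:
        print(f"{size / 1024:8.0f} KB  {path}")
    return 1 if offenders else 0
```

A release script could finish with `sys.exit(release_gate(Path("content/images")))`, where `content/images` is a placeholder for wherever your product images are staged; the printed list tells the content team exactly which files to re-save.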

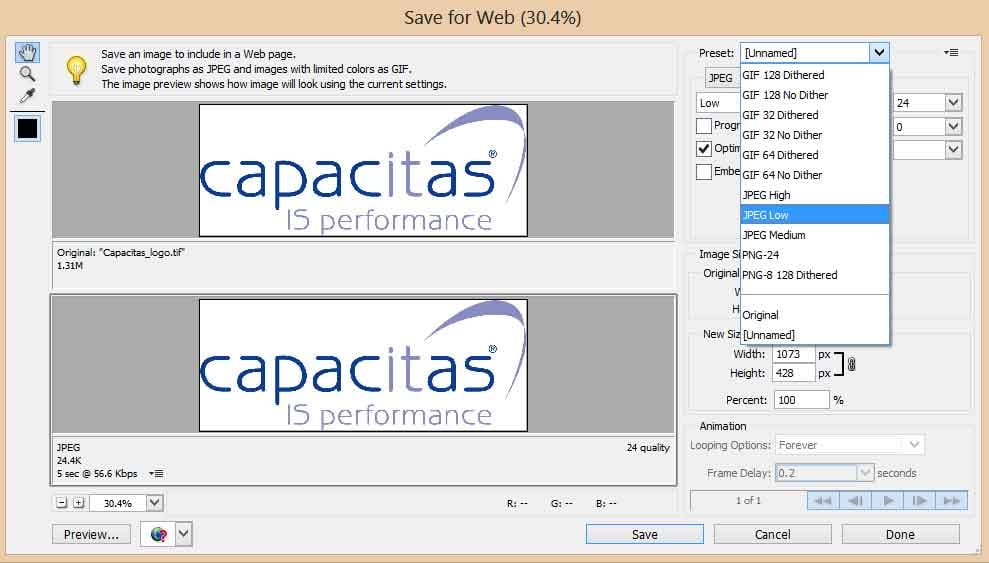

Any good image editing program, including Photoshop and Photoshop Elements, will have a “Save for Web” option, with preview. This is specifically designed to get the best balance between file size and image quality.

In the example shown, it is difficult to see any difference between a 1.3 MB TIFF image and a 24 KB JPEG version, even though the JPEG is more than 50 times smaller.

2. Monitor your live site.

You can do this in the same way, by looking for large files, or through response time monitoring services. Either way, make sure that any anomalies are reported back to the people responsible for the content, and not kept inside the IT department!
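For the “same way” approach on a live site, one option is to ask the web server for each image’s size with an HTTP `HEAD` request, which returns the headers without downloading the image. The sketch below uses only Python’s standard library. The threshold and function names are assumptions; it relies on the server sending a `Content-Length` header (not every server does, for example with chunked responses), and `urlopen` raises an exception for error statuses such as 404.

```python
from __future__ import annotations

import urllib.request
from typing import Iterable, Optional

# Assumed threshold for illustration; align it with your pre-release check.
MAX_BYTES = 200 * 1024


def content_length(url: str, timeout: float = 10.0) -> Optional[int]:
    """Ask the server for an asset's size with a HEAD request (no body is downloaded).

    Returns None if the server does not send a Content-Length header.
    """
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        value = response.headers.get("Content-Length")
    return int(value) if value is not None else None


def flag_large(sizes: dict[str, Optional[int]], max_bytes: int = MAX_BYTES) -> list[str]:
    """Return the URLs whose reported size exceeds max_bytes, sorted for stable reports."""
    return sorted(url for url, size in sizes.items() if size is not None and size > max_bytes)


def audit(urls: Iterable[str], max_bytes: int = MAX_BYTES) -> list[str]:
    """HEAD each URL in turn and return the oversized ones."""
    return flag_large({url: content_length(url) for url in urls}, max_bytes)
```

Calling `audit(urls)` with a list of your product image URLs returns the oversized ones. That list is what should be sent to the content team, rather than left on an IT dashboard.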

You may be spending very large sums of money on hardware to give your customers a great experience, when simply re-saving your image files with appropriate compression would deliver a far more cost-effective improvement. However you go about it, be sure that you have a mechanism for detecting these issues, AND an owner for any issues found.

A problem with no owner is very rarely fixed!

Do you need help?

Capacitas have the experience, expertise and in-house tools to help you ensure consistently good performance for your customers. We can detect potential problems before they breach any thresholds, load test your live system to give you confidence, and apply Performance Engineering at the development stage, so performance problems never reach your live site in the first place.
