HP LoadRunner is a tool for creating automated test scripts that analyse how a system behaves under various loads. Each script is designed to serve a particular purpose, e.g. registering a user. Once the requirements are clearly defined and a script encapsulates the actions needed to simulate those steps, testing can begin.

In a commercial environment we might say, for example, that a system has approximately 50 concurrent visitors at any given time throughout the day. What this doesn’t tell us is where, on average, those users spend most of their time. Are their ‘actions’ expensive in terms of system resources? Are they downloading a lot of information? This is where we must look at resources beyond HP LoadRunner and also monitor response times, network bytes transferred, etc. This information is integral to deciding the type of testing that needs to be carried out. After all, any type of testing (not just performance testing) should attempt to emulate a real-world scenario.

Test Scenarios

The types of test carried out include Soak, Ramp, Single Transaction (STR), and more. Each has its own benefits and should be used with a particular intention. The examples below are based on the testing of a particular web application for one of our performance testing service clients:

Soak: 100 virtual users (vUsers) at 50% of peak load for a 12-hour duration, with the aim of identifying any bottlenecks in the application or the environment which supports it. The bottlenecks we look out for here may include session timeouts, memory (available bytes), contention, etc.

Ramp test: 100 vUsers in total, in 10-minute ramps with 20 vUsers at each step. With more than one load injector, we distribute the load across them. Here our intention is to increase the number of vUsers at each step while still performing the same actions throughout the duration of the test. The trade-off we are looking for is how our (passed) transactions per second (TPS) compare with our response times at each step. A general rule of thumb is that if we increase our think time (lr_think_time(1);) we can achieve higher concurrency, and vice versa. Lower think times, on the other hand, may allow for higher throughput, but we may then see signs of process queuing, inefficient garbage collection, or memory contention.
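The think-time trade-off can be made concrete with Little's Law for a closed workload (vUsers = throughput × (response time + think time)). The sketch below is a rough planning aid, not part of LoadRunner: the function names are hypothetical, throughput is counted in script iterations rather than individual transactions, and the ramp helper assumes that the first 20 vUsers start at minute zero and that each step adds its vUsers at once.

```c
#include <assert.h>

/* Little's Law for a closed workload: N = X * (R + Z).
 * Returns the throughput X (iterations per second) that `vusers`
 * virtual users generate for a response time R and think time Z. */
double throughput_per_sec(int vusers, double response_s, double think_s)
{
    assert(vusers >= 0 && response_s + think_s > 0.0);
    return vusers / (response_s + think_s);
}

/* vUsers needed to sustain a target throughput, rounded up. */
int vusers_needed(double target_tps, double response_s, double think_s)
{
    double n = target_tps * (response_s + think_s);
    int whole = (int)n;
    return (n > whole) ? whole + 1 : whole;
}

/* vUsers running `elapsed_min` minutes into a stepped ramp
 * (assumption: step 1 starts at minute 0, steps add users instantly). */
int ramp_vusers_at(int elapsed_min, int step_min, int step_vusers, int total_vusers)
{
    int active;
    assert(elapsed_min >= 0 && step_min > 0 && step_vusers > 0);
    active = (elapsed_min / step_min + 1) * step_vusers;
    return active < total_vusers ? active : total_vusers;
}
```

With illustrative numbers, 100 vUsers with a 1-second response time and a 1-second think time give at most 50 iterations per second; holding that rate with a 3-second think time would need 200 vUsers. For the ramp above, ramp_vusers_at(25, 10, 20, 100) gives 60 vUsers under these assumptions.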

STR: A test consisting of 4 scripts, each adding some additional functionality. NB: ‘scripts are designed to fulfil a particular purpose’. In our STR we will go up to the Purchase script. Therefore, our scenario now consists of:

- Browse Products

- Browse Products, Add product/s to cart

- Browse Products, Add product/s to cart, Login

- Browse Products, Add product/s to cart, Login, Purchase

Each script/stage has 50 vUsers for a duration of 12 minutes and 30 seconds, which includes a ramp-up period of 1 user every 3 seconds. The ramp-up is a period in which a set of data is loaded so that the cache gets populated with valid data.

There is also a delay of 7 minutes and 30 seconds between each stage. This separates our steps, which helps when we analyse the performance monitor logs captured during our tests.
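The timings above can be turned into a quick schedule calculation. This is a minimal sketch with hypothetical function names; it assumes the first vUser of a stage starts at second zero and that the delay is measured from the end of one stage to the start of the next.

```c
#include <assert.h>

/* Values from the STR description; how they combine is an assumption. */
enum {
    STAGE_VUSERS     = 50,
    STAGE_DURATION_S = 12 * 60 + 30, /* 12 min 30 s, ramp-up included */
    RAMP_INTERVAL_S  = 3,            /* one vUser every 3 seconds */
    STAGE_GAP_S      = 7 * 60 + 30   /* delay between stages */
};

/* Seconds into a stage at which the last vUser starts. */
int last_vuser_start_s(void)
{
    return (STAGE_VUSERS - 1) * RAMP_INTERVAL_S;
}

/* Start offset in seconds of a stage (0-based) from the start of the run. */
int stage_start_s(int stage)
{
    assert(stage >= 0);
    return stage * (STAGE_DURATION_S + STAGE_GAP_S);
}

/* Total length in seconds of a run with the given number of stages. */
int scenario_length_s(int stages)
{
    assert(stages >= 1);
    return stages * STAGE_DURATION_S + (stages - 1) * STAGE_GAP_S;
}
```

Under these assumptions the last vUser of a stage starts after 147 seconds, leaving roughly 10 minutes at full load. Four stages start at 0, 20, 40 and 60 minutes, and the whole run takes 72 minutes and 30 seconds.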

As you will have noticed by now, I have been using a keyword: Scenario.

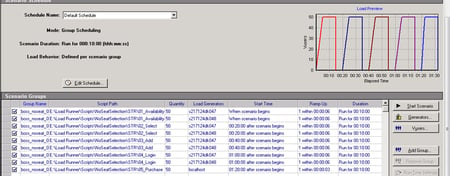

Scenarios are created when we want to load a test which we know will be used more than once. This could be for a regression suite, or even for debugging purposes. For an STR test, you can see from the image above that there are 5 scripts in the scenario and three distinct load injectors (generators). We create a scenario from the above test so that we don’t have to repeat the set-up process each time. The only things to modify would be the schedule times and the results name, and we would need to check that our data files are still valid (see VUGen).

“A script is not always made as a stand-alone test, but can be run as one if necessary”.

This is the case in our STR test. There are separate scripts for Availability, Select, Add, Login, and Purchase. Each can be run independently, and all of them can also be run at the same time in the same (STR) test.

Test Failures

Running any of these test types is all well and good, but what do you do when you begin to see failed transactions in your run? The first thing to ensure is that, when creating your scripts, all transactions are concise and correctly defined using the following calls:

lr_start_transaction("Availability_Search");

lr_end_transaction("Availability_Search", LR_AUTO);

This will be one of the early steps in identifying which transactions are experiencing failures. Having said that, ensure the code release your application is running on has been functionally tested before you run your load tests. You should also check this yourself once you have deployed a new release, by going into the application and manually working through the steps outlined in your scripts, e.g. making a purchase. This should be part of your organisation’s best practice, so that you follow the same process each time you diagnose such failures and can establish that they lie in the application’s functionality without having to run a load test. This form of sanity testing should be applied before you go ahead and raise any defects. Remember, you may experience failures in your test runs, but you must be able to:

1) Replicate these failures

2) Prove when and where they occur

3) Show which performance monitor counters degrade

Sometimes failures in your test can lie within your script or even the data files it is reading from. Let’s say we have a base URL of http://www.mywebsite.com/{region}, where region is a variable which points to our data file (.dat), regions.dat. In this regions.dat file we may have 3 entries, EN, IT, and ES, which represent English, Italian, and Spanish, each a different language version of our website. We may have been told (by word of mouth) that in our Italian version (IT) the ‘browse by product type’ step may not be functioning correctly. The output in our Controller tells us this is the case through an increase in the number of failed transactions; however, what we may have overlooked was to:

a) Remove the entry ‘IT’ from our data file, or

b) Communicate to the rest of our team that we expect to see a higher than normal failure rate in this test
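To illustrate what option a) changes, the sketch below mimics in plain C what happens when the region value is substituted into the base URL. In LoadRunner itself this substitution is handled by script parameterisation, so the function names here are hypothetical and exist only for the example.

```c
#include <stdio.h>
#include <string.h>

/* Write "<base>/<region>" into out.
 * Returns 0 on success, -1 if the result does not fit. */
int build_region_url(char *out, size_t out_size, const char *base, const char *region)
{
    int n = snprintf(out, out_size, "%s/%s", base, region);
    return (n < 0 || (size_t)n >= out_size) ? -1 : 0;
}

/* Copy every region except `excluded` into `kept`, preserving order.
 * `kept` must have room for `count` entries. Returns how many were kept. */
size_t filter_regions(const char *regions[], size_t count,
                      const char *excluded, const char *kept[])
{
    size_t i, n = 0;
    for (i = 0; i < count; i++) {
        if (strcmp(regions[i], excluded) != 0)
            kept[n++] = regions[i];
    }
    return n;
}
```

Filtering ‘IT’ out of EN, IT, ES leaves EN and ES, so no vUser requests http://www.mywebsite.com/IT and the known Italian failure no longer inflates the failed transaction count.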

Effective communication also helps in troubleshooting our failures. When working in large teams, it needs to be clear who is responsible for documenting any comments before running a test, and for following up on failures above our baseline threshold.

If we opted for option a) above, then we would need to:

- Remember to put this entry back into the file when the issue has been fixed

- Create a new file, for example regions_20121227.dat, which conforms to a standardised file naming convention.
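A dated name such as regions_20121227.dat can be generated rather than typed by hand. The helper below is a minimal sketch; the function name and the base_YYYYMMDD.dat pattern are assumptions inferred from that single example file name.

```c
#include <stdio.h>

/* Build "<base>_YYYYMMDD.dat" into out.
 * Returns 0 on success, -1 if the name does not fit in the buffer. */
int dated_data_file_name(char *out, size_t out_size, const char *base,
                         int year, int month, int day)
{
    int n = snprintf(out, out_size, "%s_%04d%02d%02d.dat", base, year, month, day);
    return (n < 0 || (size_t)n >= out_size) ? -1 : 0;
}
```

Calling dated_data_file_name(name, sizeof name, "regions", 2012, 12, 27) fills name with regions_20121227.dat. Zero-padding the month and day keeps the files in date order when sorted by name.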

Whichever option you choose, be aware that manual intervention/inspection is always vital. HP LoadRunner may be a highly automated tool, but it still needs someone who knows how to drive it!