Last week I noticed this headline on BBC News: 'Smarter' South East airport capacity needed. With a natural interest in capacity, and in airports, I found the title intriguing: what does the BBC actually mean by ‘smarter’ capacity? The news item focused on a report by the London Assembly that pointed out low utilisation of runway capacity at some of London’s airports, highlighting Stansted as being only 47% busy during 2012. Heathrow, on the other hand, was 99% busy. A commission is currently looking into options to expand capacity, and also to ‘identify ways in which we can make even better use of the capacity that already exists’.

One minor point is interesting to note in passing: how easily capacity statistics can be misread by their audience. The BBC report states that Stansted was only 47% busy, whereas the press release actually states that 47% of its capacity is free, which would make it 53% busy. Only a small difference as it happens, but…

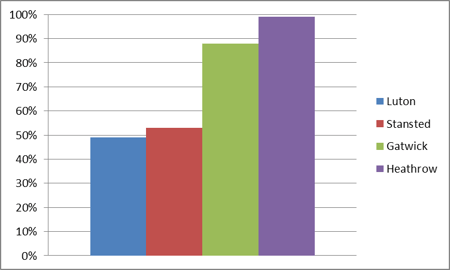

Anyway, here are the utilisation figures as presented by the London Assembly:

The question that immediately arises is: what should the utilisation be? And here lies the interesting difference between those who provision airports and those who provision servers and data centres. The implication of the study and the news report is that the three airports that are not 99% busy have wasted space, and that we need to find ways of using them better to realise their full potential. The target is for the airports to be as busy as possible, and the closer to 100% the better; that way more passengers can be flown without having to procure more airports.

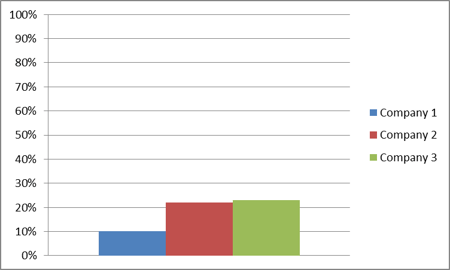

Here on the other hand are the average CPU utilisations of some data centres and systems taken from three companies we have worked with recently:

These are utilisations at peak time across all systems! And they are not exceptions: have a look at the servers in your own organisation and you may well find a figure around the 20% mark. Why is server CPU usage typically so low? On distributed platforms the idea is prevalent that the lower the utilisation, the better. It’s when the CPU gets high that we have problems and things run slowly, right? Perhaps we have a monitoring tool that fires off an alert, or worse still an incident, every time the CPU passes 70%. So, conversely, the lower the CPU usage the better, and we look at a chart like the one above and breathe a sigh of relief.

There may well be several factors that mean the ideal CPU utilisation is not 99% on every server; in fact, it rarely is:

- Transaction-driven systems pass a point of utilisation beyond which the response time of individual transactions becomes unacceptable, causing performance problems.

- There may be a need to provide failover and backup for disaster recovery, so that the availability of the system remains acceptable.

- There may be seasonal peaks or planned future growth in business demand that require more capacity than the current peak, so that planned business demand can still be processed.
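To illustrate the first point, here is a minimal sketch that assumes each server behaves like a simple M/M/1 queue, a textbook simplification rather than anything the figures above were derived from. In that model the mean response time is R = S / (1 - U), where S is the service time of one transaction and U the utilisation, so response time climbs steeply as U approaches 100%. The function names and the 50 ms service time and 200 ms target are hypothetical.

```python
def mm1_response_time(service_time_ms: float, utilisation: float) -> float:
    """Mean response time of an M/M/1 queue: R = S / (1 - U)."""
    if not 0.0 <= utilisation < 1.0:
        raise ValueError("utilisation must be in [0, 1)")
    return service_time_ms / (1.0 - utilisation)


def max_utilisation_for_target(service_time_ms: float, target_ms: float) -> float:
    """Highest utilisation at which the M/M/1 mean response time stays within target_ms.

    Rearranging R = S / (1 - U) gives U = 1 - S / R.
    """
    if target_ms < service_time_ms:
        raise ValueError("target cannot be below the bare service time")
    return 1.0 - service_time_ms / target_ms


if __name__ == "__main__":
    # Hypothetical transaction needing 50 ms of CPU service time.
    for u in (0.2, 0.5, 0.7, 0.9, 0.99):
        print(f"U={u:.0%}: R={mm1_response_time(50, u):.0f} ms")
    # With a hypothetical 200 ms response-time target, the ceiling is 75%.
    print(f"ceiling: {max_utilisation_for_target(50, 200):.0%}")
```

Real systems rarely match M/M/1 exactly, but the shape of the curve is the point: the "unacceptable" level is a property of the workload and its response-time target, not a fixed number.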

All of these may mean that 99% is not a good target to aim at. However, they don’t mean that there is no target to aim at. There is a level, maybe 99%, maybe 70%, where performance becomes unacceptable. There is a required level of availability, which can be met by a set amount of headroom for failover. There is a certain amount of business demand that needs to be processed. Maybe when you take all these together it turns out that the servers shouldn’t be running at much above 50% at peak. But there is one thing we can learn from our friends at the airports: they shouldn’t be running at much under 50% either.
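As a rough worked example of taking these together, suppose (all numbers hypothetical) that performance stays acceptable up to 70% CPU, that the service runs on a four-node cluster which must survive the loss of one node (N+1), and that 5% business growth is planned. The sketch assumes load spreads evenly over the surviving nodes and that utilisation scales linearly with demand; the `target_peak_utilisation` function is illustrative only.

```python
def target_peak_utilisation(perf_ceiling: float, nodes: int,
                            spare_nodes: int, growth: float) -> float:
    """Highest normal peak utilisation per node that still leaves room for
    failover and planned growth, under a simple linear model."""
    if nodes <= spare_nodes:
        raise ValueError("need more nodes than spares")
    # After losing spare_nodes, the surviving nodes absorb their load, so each
    # node may normally run at only (nodes - spare_nodes) / nodes of the ceiling.
    failover_factor = (nodes - spare_nodes) / nodes
    # Planned growth multiplies demand by (1 + growth), shrinking headroom further.
    return perf_ceiling * failover_factor / (1.0 + growth)


print(f"{target_peak_utilisation(0.70, nodes=4, spare_nodes=1, growth=0.05):.0%}")
# prints: 50%
```

Here 70% × 3/4 = 52.5%, and dividing by 1.05 for growth leaves 50%: a target well below 99%, but one that also makes a 20% peak look like a lot of unused capacity.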

When servers run over capacity, the result can be incidents, failures and system unavailability. When they are under-utilised, something else happens, and it is becoming more of a problem for organisations in the current economic climate: money is wasted. It seems OK when it is just one server, or just ten servers, but across a large organisation with perhaps thousands of servers, millions of pounds are being wasted. Capacity planning has to be a balancing act between performance, availability and demand on one hand, and cost on the other. This is one reason why, when we at Capacitas are involved in setting server thresholds, we never set only red and amber upper thresholds; we also set a lower threshold (usually blue!) that the CPU peak should not fall below. Increasingly, organisations ask for our assistance not mainly to solve typical capacity ‘issues’ but to help them with the underlying issue of having too much expensive capacity sitting there doing nothing.
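A minimal sketch of such two-sided thresholds follows. The band names mirror the colours above, with green added for the in-range case; the 70% amber level echoes the alerting example earlier, but the 85% red and 30% blue values, the `classify_peak` function and the server names are all hypothetical placeholders, not actual Capacitas thresholds.

```python
from enum import Enum


class Band(Enum):
    RED = "red"      # over capacity: performance or availability at risk
    AMBER = "amber"  # approaching the upper limit: worth investigating
    GREEN = "green"  # within the target range
    BLUE = "blue"    # under-utilised: capacity (and money) is being wasted


def classify_peak(peak_cpu_pct: float, red: float = 85.0,
                  amber: float = 70.0, blue: float = 30.0) -> Band:
    """Classify a server's peak CPU utilisation against upper and lower thresholds.

    The default threshold values are hypothetical placeholders.
    """
    if not blue < amber < red:
        raise ValueError("thresholds must satisfy blue < amber < red")
    if peak_cpu_pct >= red:
        return Band.RED
    if peak_cpu_pct >= amber:
        return Band.AMBER
    if peak_cpu_pct < blue:
        return Band.BLUE
    return Band.GREEN


# Hypothetical servers and their peak CPU percentages.
peaks = {"web-01": 92.0, "db-01": 74.0, "app-01": 48.0, "batch-01": 12.0}
for server, peak in peaks.items():
    print(server, classify_peak(peak).value)
```

The design choice is simply that a low peak is reported as a finding in its own right, rather than silently counting as "healthy".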

In the end the difference between provisioning airports and servers probably comes down to one main factor: servers are a lot cheaper than airports, even when there are thousands of them. But many organisations are still sitting on potential savings of millions, which they could achieve simply by doing some proper capacity planning.
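To put rough numbers on such savings, here is a back-of-the-envelope sketch. It assumes, hypothetically, an estate of 2,000 servers peaking at around 20% CPU, a target peak of 50%, an annual cost of £2,000 per server, and that load can be freely consolidated and scales linearly with server count, which real estates rarely satisfy exactly. The `consolidation_estimate` function is illustrative only.

```python
import math


def consolidation_estimate(servers: int, avg_peak_pct: float, target_peak_pct: float,
                           annual_cost_per_server: float) -> tuple[int, float]:
    """Rough count of servers that could be released, and the annual saving,
    if the same peak load ran at target_peak_pct instead of avg_peak_pct.

    Assumes load is interchangeable across servers and scales linearly.
    """
    if not 0 < avg_peak_pct <= 100 or not 0 < target_peak_pct <= 100:
        raise ValueError("percentages must be in (0, 100]")
    # Servers needed to carry the same total peak load at the target utilisation.
    needed = math.ceil(servers * avg_peak_pct / target_peak_pct)
    released = max(servers - needed, 0)
    return released, released * annual_cost_per_server


released, saving = consolidation_estimate(2000, 20.0, 50.0, 2000.0)
print(f"{released} servers, £{saving:,.0f} per year")
# prints: 1200 servers, £2,400,000 per year
```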