Cloud-based IT services are increasing in popularity due to flexibility in capacity delivery and the reduction in capital expenditure.

However, a challenge many organisations experience is that cloud costs become uncontrolled.

Fortunately, there are five ways to deliver cloud cost control:

- Use reserved instances, where possible

- Ensure that instances are fully tagged

- Plan for all of the Seven Pillars of Performance

- Keep tight control of non-production environments

- Predict when scaling is required

1. Use Reserved Instances, where possible

AWS Reserved Instances are approximately 40% less expensive than On-Demand Instances, based on the hourly rate.

On-Demand appears attractive because users feel they are paying only for what they use, whereas Reserved Instances require a commitment to pay for a set amount of capacity over a period of time.

The challenge is to ensure that the Reserved Instance is appropriately sized (i.e. there is minimal unused capacity), but it is worth the effort!
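The sizing trade-off can be illustrated with some simple arithmetic. The sketch below assumes the approximate 40% discount quoted above, a hypothetical On-Demand rate of $0.10 per hour and a 730-hour month. The function names are illustrative only, and real pricing depends on instance type, region and term.

```python
def break_even_utilisation(discount: float) -> float:
    """Fraction of the month an instance must run before a reservation
    costs no more than paying the On-Demand rate for the hours used."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in the range [0, 1)")
    return 1.0 - discount


def monthly_costs(on_demand_rate: float, discount: float,
                  hours_used: float, hours_in_month: float = 730.0):
    """Return (on_demand_cost, reserved_cost) for one instance.

    On-Demand is charged only for the hours used; the reservation is
    charged at the discounted rate for every hour in the month.
    """
    on_demand_cost = on_demand_rate * hours_used
    reserved_cost = on_demand_rate * (1.0 - discount) * hours_in_month
    return on_demand_cost, reserved_cost


if __name__ == "__main__":
    RATE = 0.10      # hypothetical On-Demand price per hour
    DISCOUNT = 0.40  # approximate saving quoted in the article
    print("Break-even utilisation:", break_even_utilisation(DISCOUNT))
    for hours in (365, 600):
        on_demand, reserved = monthly_costs(RATE, DISCOUNT, hours)
        print(hours, "hours:", round(on_demand, 2), "vs", round(reserved, 2))
```

Under these assumed figures, a reservation only pays off when the instance runs for more than 60% of the month, which is why unused reserved capacity erodes the saving so quickly.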

There are some factors to consider when using Reserved Instances:

- Lower hourly rate, compared to On-Demand

- No need to pay upfront (no-upfront payment options are available)

- Predictable production (and dev/test) workloads lend themselves well to Reserved Instances

- Unused Standard Reserved Instances can be sold on the AWS Reserved Instance Marketplace

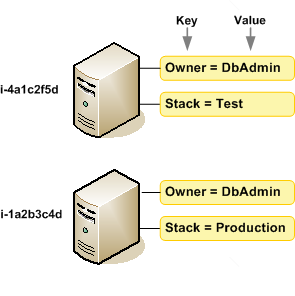

2. Ensure that instances are fully tagged

Using fields to "tag" what your cloud instances are doing, and for whom, allows cloud cost analysis. When cloud computing bills are analysed, it is commonplace for a proportion of those bills not to be attributable to cost centres or users.

At one client, 14% of cloud costs could not be attributed because the cloud resources had been "spun up" without any description of the owner, the purpose or role of the resource, or the environment.

Tags are free-form "metadata" that have no meaning other than to the customer, who uses them for cost re-attribution. The cloud provider can include the tags in monthly bills to allow for cost analysis and control.

A recommended list of tags is as follows:

- mp:environment (e.g. production-eu-west-1)

- mp:service (e.g. MPM-Dev)

- Resource type (e.g. m3.xlarge)

- Usage type (e.g. Instance)

- Price model (e.g. OnDemand, Reservation)

- mp:server-role (e.g. app, db, control)

- Sub type (e.g. Windows, Linux/Unix, r3.2xlarge)

- Operation (e.g. RunInstances, IOWrite)

- Total cost

These tags can be reported through cloud monitoring tools and should be thought of as a prerequisite for such tools.
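One way to measure how much of a bill cannot be attributed is to check each billing line item for the required tags. The following is a minimal sketch, assuming line items have already been exported into dictionaries with a `cost` and a `tags` field. That record shape, the function name and the choice of required tags are assumptions for illustration, not a cloud provider's billing format.

```python
# Tags that must be present before a cost can be attributed (assumed set,
# taken from the mp: examples in the list above).
REQUIRED_TAGS = ("mp:environment", "mp:service", "mp:server-role")


def unattributed_share(line_items, required_tags=REQUIRED_TAGS):
    """Return the fraction of total cost carried by line items that are
    missing (or have an empty value for) at least one required tag."""
    total = sum(item["cost"] for item in line_items)
    if total == 0:
        return 0.0
    untagged = sum(
        item["cost"]
        for item in line_items
        if any(not item.get("tags", {}).get(tag) for tag in required_tags)
    )
    return untagged / total


if __name__ == "__main__":
    # Hypothetical line items, for illustration only.
    bill = [
        {"cost": 86.0, "tags": {"mp:environment": "production-eu-west-1",
                                "mp:service": "MPM-Dev",
                                "mp:server-role": "app"}},
        {"cost": 14.0, "tags": {"mp:service": "MPM-Dev"}},
    ]
    print(f"Unattributed: {unattributed_share(bill):.0%}")
```

Tracking this figure month by month gives a simple indicator of whether tagging discipline is improving.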

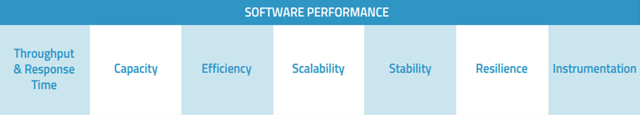

3. Plan for all of the 7 Pillars of Performance

Planning for cloud services isn't as simple as ensuring there is the right amount of Capacity.

At Capacitas we have defined the 7 Pillars of Software Performance, of which Capacity is one.

In the cloud we still need to consider the requirements around the remaining 6 pillars.

For example, unreliable interface availability (Resilience) can dramatically affect the throughput of the core system. We also need to understand how the system can scale with increasing demand (Scalability).

The Instrumentation used to manage system performance should cover all of the pillars of performance. Instrumentation should be addressed early in the systems development life cycle.

A word of warning regarding Capacity: deploying capacity on demand or "playing safe" with oversized reserved instances is the same old-fashioned method as "let's make sure we have plenty of tin". More often than not, we find that Capacity is oversized.

4. Keep tight control of non-production environments

Have a procedure for managing the use and costs of cloud-based development and test environments.

The justification for using on-demand services is largely driven by short-term test and development requirements. In practice, however, on-demand instances proliferate due to:

- Ease of access

- Lack of ownership

A number of steps can be taken to reduce costs:

- Adopt a multi-product/customer model for development and test (i.e. do not have a separate environment for each product/service/customer); this can reduce costs by 80%

- Restrict the authority for starting up instances and for dialling up capacity

- Ensure that metadata tags are in place when starting up instances

- Have a regular "maintenance sweep" to ensure that on-demand instances are being used as intended
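A maintenance sweep can be as simple as a script run over an inventory of non-production instances. The sketch below assumes a hypothetical inventory (a list of dictionaries with `id`, `launched` and `tags`), a 14-day age limit chosen purely for illustration, and an assumed set of required tags. It does not call any cloud provider API.

```python
from datetime import datetime, timedelta, timezone

# Assumed policy values, for illustration only.
REQUIRED_TAGS = ("mp:environment", "mp:service", "mp:server-role")
MAX_AGE = timedelta(days=14)


def sweep(instances, now, max_age=MAX_AGE, required_tags=REQUIRED_TAGS):
    """Return a list of (instance_id, reasons) for instances that need review.

    An instance is flagged when a required tag is missing or empty,
    or when it has been running for longer than max_age.
    """
    findings = []
    for inst in instances:
        reasons = []
        tags = inst.get("tags", {})
        missing = [tag for tag in required_tags if not tags.get(tag)]
        if missing:
            reasons.append("missing tags: " + ", ".join(missing))
        if now - inst["launched"] > max_age:
            reasons.append("running longer than allowed")
        if reasons:
            findings.append((inst["id"], reasons))
    return findings


if __name__ == "__main__":
    now = datetime(2024, 1, 31, tzinfo=timezone.utc)
    # Hypothetical inventory of development and test instances.
    inventory = [
        {"id": "dev-1", "launched": now - timedelta(days=2),
         "tags": {"mp:environment": "dev", "mp:service": "MPM-Dev",
                  "mp:server-role": "app"}},
        {"id": "test-7", "launched": now - timedelta(days=30), "tags": {}},
    ]
    for instance_id, reasons in sweep(inventory, now):
        print(instance_id, "->", "; ".join(reasons))
```

The output of such a sweep is a review list rather than an automatic shutdown, so that owners can confirm whether an instance is still being used as intended.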

5. Predict when scaling is required

Rather than relying on the auto scaling functionality of on-demand instances, it is more cost-effective to predict when capacity is required and use reserved instances instead.

In addition, sub-optimal auto scaling configurations may result in insufficient capacity following sharp increases in demand.

With this in mind, AWS offer a pre-warming service to ensure that the instance scales. If the requirements are known ahead of time, consider reserved instances to reduce costs.

Cloud capacity management methodologies such as workload characterisation, business-demand-driven capacity forecasting and capacity modelling all remain relevant in cloud computing.
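As an illustration of demand-driven forecasting, the sketch below fits a straight-line trend to historical demand and estimates how many periods remain before a capacity limit is reached. It is a deliberately simple model that assumes linear growth. The function names and sample figures are hypothetical, and real workloads often need seasonality and business drivers to be taken into account.

```python
import math
from typing import Optional, Sequence, Tuple


def fit_trend(demand: Sequence[float]) -> Tuple[float, float]:
    """Least-squares straight line through demand observed at periods 0..n-1.

    Returns (slope, intercept).
    """
    n = len(demand)
    if n < 2:
        raise ValueError("at least two observations are required")
    mean_x = (n - 1) / 2
    mean_y = sum(demand) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(demand))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def periods_until_capacity(demand: Sequence[float],
                           capacity: float) -> Optional[int]:
    """Whole periods until the fitted trend reaches capacity.

    Returns 0 if the trend is already at or above capacity, and None if
    demand is flat or falling (the limit is never reached on this trend).
    """
    slope, intercept = fit_trend(demand)
    current = intercept + slope * (len(demand) - 1)
    if current >= capacity:
        return 0
    if slope <= 0:
        return None
    return math.ceil((capacity - current) / slope)


if __name__ == "__main__":
    # Hypothetical monthly peak demand (e.g. requests per second).
    history = [100, 110, 120, 130]
    print("Periods until capacity:", periods_until_capacity(history, 160))
```

Knowing the lead time in advance is what makes it possible to commit to reserved capacity rather than relying on auto scaling at the last moment.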